Mobius Labs

@Mobius_Labs

Followers: 3K · Following: 1K · Media: 144 · Statuses: 398

Multimodal AI for the world's scale. Proponents of Open Source and Open Intelligence. https://t.co/1nC6r8hOrE for some of our recent work.

Berlin, Germany

Joined April 2018

RT @mobicham: Been playing with low-bit training this morning (3-bit, 2-bit, 1.58-bit). Simple key trick to make it work: dampen the gradien…

gist.github.com
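Some background on the bit widths above: "1.58-bit" means ternary weights {-1, 0, +1}, since log2(3) ≈ 1.585 bits per weight. Low-bit training is commonly done with fake quantization plus a straight-through estimator (STE). The forward pass uses quantized weights, while the updates go to full-precision master weights. The sketch below shows only that generic recipe and assumes absmean scaling in the style of BitNet b1.58. It does not reproduce the gradient trick from the tweet, which is truncated here. All function names are hypothetical.

```python
import math

# "1.58-bit" is the information content of one ternary value: log2(3).
TERNARY_BITS = math.log2(3)

def ternary_quant(w):
    """Absmean ternary quantization (assumed, BitNet b1.58 style).
    Returns integer codes in {-1, 0, 1} and the shared scale."""
    scale = sum(abs(v) for v in w) / len(w) + 1e-8
    codes = [max(-1, min(1, round(v / scale))) for v in w]
    return codes, scale

def fake_quant(w):
    """Quantize then dequantize: the weights the forward pass actually sees."""
    codes, scale = ternary_quant(w)
    return [c * scale for c in codes]

def ste_sgd_step(master_w, grad_wrt_quantized, lr):
    """Straight-through estimator: treat d(fake_quant)/dw as the identity, so the
    gradient taken at the quantized weights updates the full-precision master copy."""
    return [w - lr * g for w, g in zip(master_w, grad_wrt_quantized)]

master = [0.9, -0.05, -1.2, 0.3]
print(ternary_quant(master)[0])   # [1, 0, -1, 0]
master = ste_sgd_step(master, [0.0, -10.0, 0.0, 0.0], lr=0.1)
print(ternary_quant(master)[0])   # [1, 1, -1, 0]
```

The second weight's code flips from 0 to 1 only because the update accumulates in the full-precision copy. Updating the ternary codes directly would require a step large enough to jump a whole level.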


RT @mobicham: Simple and fast MXFP8 activation quant kernel:
✓ Padding-aware for arbitrary seq lens
✓ SM-aware unrolling to improve occup…
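For reference, here is a plain-Python sketch of what an MXFP8 quantizer computes. It follows the OCP Microscaling (MX) recipe as we understand it: blocks of 32 values share one power-of-two (E8M0) scale, and each element is stored as FP8 E4M3. Zero-padding the tail block corresponds to the "padding-aware" point in the tweet. SM-aware unrolling is a GPU scheduling detail and is not modeled. The function names are hypothetical, and clamping the scale to the E8M0 exponent range is omitted.

```python
import math

BLOCK = 32          # MX block size: elements sharing one scale
E4M3_MAX = 448.0    # largest finite FP8 E4M3 value
E4M3_EMAX = 8       # exponent of the largest power of two representable in E4M3

def round_e4m3(x):
    """Round to the nearest FP8 E4M3 value, saturating at +/-448."""
    if x == 0.0:
        return 0.0
    mag = abs(x)
    # floor(log2(mag)) computed exactly via frexp; below 2**-6 values are
    # subnormal and the spacing stays fixed at 2**-9.
    exp = max(math.frexp(mag)[1] - 1, -6)
    step = 2.0 ** (exp - 3)             # 3 mantissa bits
    q = min(round(mag / step) * step, E4M3_MAX)
    return math.copysign(q, x)

def mxfp8_quantize_row(row):
    """Return (per-block scale exponents, FP8 values) for one row.
    The tail is zero-padded to a full block so any length works."""
    padded = list(row) + [0.0] * ((-len(row)) % BLOCK)
    scale_exps, values = [], []
    for start in range(0, len(padded), BLOCK):
        block = padded[start:start + BLOCK]
        amax = max(abs(v) for v in block)
        # Shared E8M0 scale: 2**(floor(log2(amax)) - emax_elem).
        shared_exp = math.frexp(amax)[1] - 1 - E4M3_EMAX if amax > 0 else 0
        scale_exps.append(shared_exp)
        values.extend(round_e4m3(v / 2.0 ** shared_exp) for v in block)
    return scale_exps, values[:len(row)]

def mxfp8_dequantize_row(scale_exps, values):
    return [v * 2.0 ** scale_exps[i // BLOCK] for i, v in enumerate(values)]

exps, vals = mxfp8_quantize_row([float(i) for i in range(40)])
print(exps)                                        # [-4, -3]
deq = mxfp8_dequantize_row(exps, vals)
print(deq[1], deq[31], deq[39])                    # 1.0 28.0 40.0
```

With this floor-based scale, the largest values in a block can saturate at 448. In the example, 31.0 comes back as 28.0. Some implementations choose a larger scale to avoid that clipping.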


RT @mobicham: Here's how to write a fast MXFP4/NVFP4 dequant GEMV kernel for batch-size=1:
- Use a mapping + tl.gather to map the quant matr…
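A batch-size-1 GEMV computes y = W·x for a single activation vector, so the cost is dominated by reading and decoding the 4-bit weights. The plain-Python reference below decodes each FP4 (E2M1) code with a 16-entry lookup table. We take this to be the role the tweet's "mapping + tl.gather" plays on the GPU, but the tweet is truncated, so that reading is an assumption. The sketch uses MXFP4-style power-of-two block scales. The low-nibble-first packing layout and all names are hypothetical.

```python
# FP4 E2M1 code -> value. Bit 3 is the sign; bits 0-2 index the magnitudes.
FP4_E2M1_LUT = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0,
                -0.0, -0.5, -1.0, -1.5, -2.0, -3.0, -4.0, -6.0]

def mxfp4_gemv(packed_rows, scale_exps, x, block=32):
    """y = W @ x for a single activation vector x (batch size 1).

    packed_rows[n]: bytes holding row n, two 4-bit codes per byte,
                    low nibble = even column (hypothetical layout)
    scale_exps[n][b]: power-of-two exponent for columns b*block .. b*block+block-1
    """
    y = []
    for row_bytes, row_scales in zip(packed_rows, scale_exps):
        acc = 0.0
        for k, xk in enumerate(x):
            byte = row_bytes[k // 2]
            code = byte >> 4 if k % 2 else byte & 0xF
            # Table lookup replaces bit manipulation to decode the FP4 value.
            w = FP4_E2M1_LUT[code] * 2.0 ** row_scales[k // block]
            acc += w * xk
        y.append(acc)
    return y

# One row with codes 2, 9, 7, 15 -> [1.0, -0.5, 6.0, -6.0]; block size 2 for brevity,
# scales 2**0 and 2**-1 -> effective row [1.0, -0.5, 3.0, -3.0].
row = bytes([2 | (9 << 4), 7 | (15 << 4)])
print(mxfp4_gemv([row], [[0, -1]], [1.0, 2.0, 3.0, 4.0], block=2))   # [-3.0]
```

For NVFP4, the decode table would be the same, but blocks are 16 wide and each block scale is an FP8 E4M3 value rather than a power of two.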


FP4 weights meet high accuracy: logit-distillation bias correction for MXFP4 & NVFP4. On Llama-3.1-8B, it recovers ≥99% relative quality. Details at:

mobiusml.github.io
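This tweet does not describe the bias-correction method (it is only linked), so the method is not sketched here. What can be illustrated is the difference between the two target formats. MXFP4 groups 32 FP4 (E2M1) values under a power-of-two E8M0 scale. NVFP4 groups 16 values under an FP8 E4M3 scale and also carries a per-tensor FP32 scale, which the sketch omits. The sketch fake-quantizes a row both ways. Tie-breaking in `round_e2m1` is simplified, and all names are hypothetical.

```python
import math

E2M1_GRID = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]   # FP4 E2M1 magnitudes

def round_e2m1(x):
    """Nearest E2M1 value, saturating at +/-6 (ties go to the smaller magnitude here)."""
    mag = min(E2M1_GRID, key=lambda g: abs(abs(x) - g))
    return math.copysign(mag, x)

def round_e4m3(x):
    """Nearest FP8 E4M3 value, saturating at +/-448 (used for NVFP4 block scales)."""
    if x == 0.0:
        return 0.0
    exp = max(math.frexp(abs(x))[1] - 1, -6)
    step = 2.0 ** (exp - 3)
    return math.copysign(min(round(abs(x) / step) * step, 448.0), x)

def quantize_block(block, fmt):
    amax = max(abs(v) for v in block)
    if amax == 0.0:
        return 1.0, [0.0] * len(block)
    if fmt == "mxfp4":
        # Power-of-two (E8M0) scale: 2**(floor(log2(amax)) - 2), since 6 = 1.5 * 2**2.
        scale = 2.0 ** (math.frexp(amax)[1] - 1 - 2)
    else:
        # NVFP4: map amax to the top FP4 level, then store the scale as FP8 E4M3.
        # Assumes the omitted per-tensor scale keeps amax / 6 within E4M3 range.
        scale = round_e4m3(amax / 6.0)
    return scale, [round_e2m1(v / scale) for v in block]

def fake_quant(row, fmt):
    """Quantize and dequantize a row with MXFP4 (32-wide blocks) or NVFP4 (16-wide)."""
    size = 32 if fmt == "mxfp4" else 16
    out = []
    for i in range(0, len(row), size):
        scale, q = quantize_block(row[i:i + size], fmt)
        out.extend(v * scale for v in q)
    return out

row = [5.0, 1.0] + [0.0] * 14
print(fake_quant(row, "mxfp4")[:2])   # [4.0, 1.0]
print(fake_quant(row, "nvfp4")[:2])   # [4.875, 0.8125]
```

On this toy row, NVFP4 reconstructs 5.0 as 4.875, while MXFP4 gives 4.0. The non-power-of-two scale lets the block maximum land near the top FP4 level. This is one illustration, not a general accuracy claim.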


RT @mobicham: GemLite runs fast on the MI300X, but there's still plenty of performance left to unlock

0

1

0

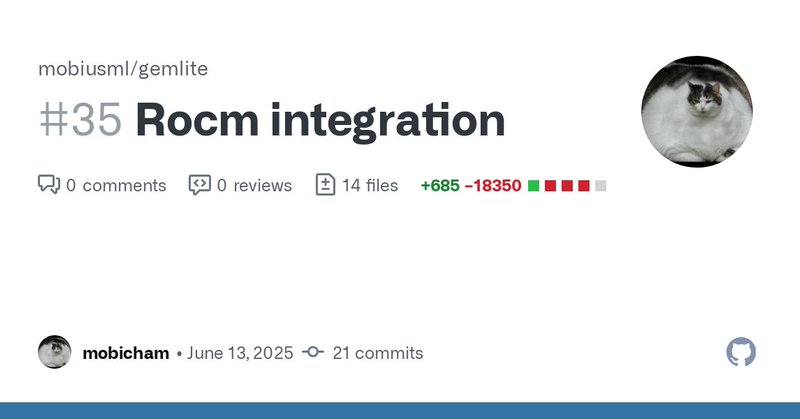

RT @mobicham: ROCm support added 👀, mainly focusing on the MI300X.

github.com

Adds ROCm support, focusing on the MI300X


RT @mobicham: Damn. Luckily, we have HQQ that only takes 5 secs to quantize an 8B model, super useful to get started right away with any mo…
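HQQ (Half-Quadratic Quantization) needs no calibration data. It keeps ordinary affine group quantization and refines the zero-point by minimizing an l_p (p < 1) reconstruction error, alternating between two cheap closed-form steps. The sketch below is a simplified, from-memory rendering of that idea for a single weight group in plain Python, not the hqq library's code. The hyperparameters (p = 0.7, beta = 10, kappa = 1.01, 20 iterations) are assumptions, the scale is held fixed, and the group must not be constant. All names are hypothetical.

```python
import math

def quantize(w, scale, zero, nbits):
    qmax = 2 ** nbits - 1
    return [min(max(round(v / scale + zero), 0), qmax) for v in w]

def dequant(w_q, scale, zero):
    return [(q - zero) * scale for q in w_q]

def mean_abs_error(w, w_r):
    return sum(abs(a - b) for a, b in zip(w, w_r)) / len(w)

def shrink_lp(x, beta, p):
    """Generalized soft-thresholding: proximal step for an l_p penalty with p < 1."""
    return [math.copysign(max(abs(v) - (abs(v) + 1e-8) ** (p - 1) / beta, 0.0), v)
            for v in x]

def hqq_like_group(w, nbits=4, p=0.7, beta=10.0, kappa=1.01, iters=20):
    """Return (scale, best zero-point, its mean abs error) for one weight group."""
    lo, hi = min(w), max(w)
    scale = (hi - lo) / (2 ** nbits - 1)   # plain min-max scale, kept fixed
    zero = -lo / scale                      # round-to-nearest (RTN) starting zero-point
    best_zero, best_err = zero, float("inf")
    for _ in range(iters):
        w_q = quantize(w, scale, zero, nbits)
        w_r = dequant(w_q, scale, zero)
        err = mean_abs_error(w, w_r)
        if err < best_err:
            best_zero, best_err = zero, err
        # Sparse estimate of the quantization error, then closed-form zero update.
        w_e = shrink_lp([a - b for a, b in zip(w, w_r)], beta, p)
        zero = sum(q - (a - e) / scale for q, a, e in zip(w_q, w, w_e)) / len(w)
        beta *= kappa
    return scale, best_zero, best_err

w = [math.sin(0.37 * i) + 0.1 * i for i in range(64)]   # toy weight group
scale, zero, err = hqq_like_group(w)
z0 = -min(w) / scale
rtn = mean_abs_error(w, dequant(quantize(w, scale, z0, 4), scale, z0))
print(f"RTN error {rtn:.4f} -> refined zero-point error {err:.4f}")
```

Every step is elementwise or a mean over the group, with no data-dependent calibration pass. This is consistent with the tweet's point that quantizing a whole model takes seconds.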


RT @mobicham: GemLite 0.4.7 is out 🔥. It boosts performance by 5-10 tokens/sec end-2-end by using an interesting trick which might seem wei…

github.com

Fast low-bit matmul kernels in Triton.

RT @mobicham: @main_horse @gaunernst Llama3.1 8B running A16W4 with FP16 accumulation on the 5090 RTX
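"A16W4" means 16-bit activations and 4-bit weights. The weights are unpacked and dequantized on the fly inside the matmul. "FP16 accumulation" means the running sums are kept in half precision rather than FP32, which can be faster on some GPUs but loses precision. The serial sketch below shows both pieces in plain Python, with `struct`'s half-precision format standing in for FP16 hardware. The packing layout, the affine (scale, zero-point) dequantization, and all names are assumptions.

```python
import struct

def to_fp16(x):
    """Round a Python float to the nearest IEEE half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

def pack_uint4(codes):
    """Pack 4-bit codes (0..15) two per byte, low nibble first (assumed layout)."""
    assert len(codes) % 2 == 0
    return bytes(codes[i] | (codes[i + 1] << 4) for i in range(0, len(codes), 2))

def unpack_uint4(packed):
    out = []
    for b in packed:
        out.extend((b & 0xF, b >> 4))
    return out

def a16w4_dot(x, packed_w, scale, zero, fp16_accum=True):
    """One output element: 16-bit activations times 4-bit weights."""
    acc = 0.0
    for xi, q in zip(x, unpack_uint4(packed_w)):
        w = to_fp16((q - zero) * scale)          # dequantize the weight to FP16
        prod = to_fp16(to_fp16(xi) * w)
        # FP16 accumulation rounds the running sum after every add.
        acc = to_fp16(acc + prod) if fp16_accum else acc + prod
    return acc

ones = [1.0] * 4096
w = pack_uint4([9] * 4096)          # code 9 with zero-point 8 -> weight 1.0
print(a16w4_dot(ones, w, scale=1.0, zero=8, fp16_accum=True))    # 2048.0
print(a16w4_dot(ones, w, scale=1.0, zero=8, fp16_accum=False))   # 4096.0
```

Above 2048 the FP16 spacing is 2, so adding 1.0 rounds back to 2048 and the serial sum stalls. Real kernels split the reduction across tiles and threads, which keeps partial sums smaller, so this loop exaggerates the effect.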


RT @mobicham: We just made inference 1.5x faster with larger batch-sizes compared to last week 🤯 - work in progress


GemLite now supports @vllm_project vLLM V1, which brings up to 1.25x faster inference vs. V0!

github.com

This release mainly focuses on vLLM V1 (torch.compile) support.
What's Changed
- Add support for vllm compile by @mobicham in #32
Full Changelog: 0.4.5...0.4.6


RT @mobicham: Well-optimized Triton kernels can perform very well end-2-end, even competing with highly optimized kernels like Marlin. http…


RT @mobicham: GemLite is significantly outperforming the default A16W4 vLLM kernel on the MI300X 🚀


Initial attempts at bringing GemLite to the @AMD MI300X.

Hitting ~200 tokens/sec with Llama3-8B (bs=1) using GemLite (after changes) on the AMD MI300X. Performance lands between the A100 and H100; given the price point, the MI300X is shaping up to be a very compelling inference option!
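At batch size 1, decoding is typically memory-bound: each generated token streams all the weights from memory once. The rough check below estimates the weight bandwidth implied by the ~200 tokens/sec figure. It assumes about 8e9 parameters and 4-bit weights (the tweet does not state the bit width) and ignores KV-cache traffic and quantization metadata. The function name is hypothetical.

```python
def effective_weight_bandwidth_gbs(tokens_per_s, n_params, bits_per_weight):
    """GB/s of weight traffic implied by a decode rate at batch size 1.
    Ignores KV cache, activations, and scale/zero-point metadata."""
    bytes_per_token = n_params * bits_per_weight / 8
    return tokens_per_s * bytes_per_token / 1e9

print(effective_weight_bandwidth_gbs(200, 8e9, 4))    # 800.0
print(effective_weight_bandwidth_gbs(200, 8e9, 16))   # 3200.0
```

Under these assumptions, the kernel moves roughly 0.8 TB/s of weights. That is well below the MI300X's advertised ~5.3 TB/s peak HBM bandwidth, which suggests headroom if the assumptions hold.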


RT @mobicham: So I ran an evaluation of Gemma 3 12B QAT vs. HQQ. HQQ takes a few seconds to quantize the model and outperforms the QAT versi…


Thanks to bfloat16 support, GemLite now runs models like Google's Gemma 3, avoiding the usual accuracy loss from fp16 conversions.

huggingface.co
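bfloat16 avoids the problem mainly through range. bf16 keeps FP32's 8-bit exponent, so it can represent magnitudes up to about 3.4e38. FP16 has a 5-bit exponent and tops out at 65504, so any value beyond that overflows when a bf16 model is converted to FP16. The trade-off is precision: bf16 has 7 explicit mantissa bits versus 10 in FP16. The stdlib-only sketch below shows both effects. The helper names are hypothetical, and NaN handling is omitted.

```python
import struct

def to_fp16(x):
    """Round to IEEE half precision; struct raises OverflowError beyond +/-65504."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

def to_bf16(x):
    """Round a float to bfloat16: round-to-nearest-even on the FP32 bit pattern."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    bits += 0x7FFF + ((bits >> 16) & 1)      # round half to even on the top 16 bits
    bits = (bits >> 16) << 16                 # drop the low 16 mantissa bits
    return struct.unpack("<f", struct.pack("<I", bits & 0xFFFFFFFF))[0]

print(to_bf16(70000.0))                            # 70144.0 (to_fp16 would overflow)
print(to_bf16(1.0 + 2**-10), to_fp16(1.0 + 2**-10))  # 1.0 1.0009765625
```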
