Krzysztof Choromanski

@kchorolab

Followers

415

Following

18

Media

5

Statuses

43

Mind of a scientist. Heart of a mathematician. Soul of a pianist. Head of the Vision Team @ Google DeepMind Robotics and Adjunct Assistant Professor @Columbia.

Joined October 2020

Very proud of our Google DeepMind Robotics Team ! Big milestone and an accomplishment of the entire Team.

✨🤖 Today our team is so excited to bring Gemini 2.0 into the physical world with Gemini Robotics, our most advanced AI models to power the next generation of helpful robots. 🤖✨ Check it out! https://t.co/RDevxEOKKz And read our blog: https://t.co/UGJSjVwLRm We are looking

0

0

1

This is so far a pretty unusual year. Two best award papers in two top Robotics conferences: ICRA 2024 and IROS 2024, within last 5 months. I am blessed to work with amazing people...

3

1

12

"Embodied AI with Two Arms: Zero-shot Learning, Safety and Modularity" winning The Best RoboCup Paper Award at #IROS2024 in Abu Dhabi, UAE. Congratulations to the Team !

0

0

2

SARA-RT winning The Best Robotic Manipulation Paper Award at #ICRA2024 in Yokohama, the biggest Robotics conference in history (6K+ attendees). The only paper at the conference with final presentation complemented with live robotic demo. Congratulations Team !

2

0

14

SARA-RT (fast-inference Robotics Transformers) presented at the Best Papers in Robotics Manipulation Session at #ICRA2024 in Yokohama, with live robotic demo from New York. Great job Team !

2

1

12

New @GoogleDeepMind blog post covering 3 recent works on AI + robotics! 1. Auto-RT scales data collection with LLMs and VLMS. 2. SARA-RT significantly speeds up inference for Robot Transformers. 3. RT-Trajectory introduces motion-centric goals for robot generalization.

How could robotics soon help us in our daily lives? 🤖 Today, we’re announcing a suite of research advances that enable robots to make decisions faster as well as better understand and navigate their environments. Here's a snapshot of the work. 🧵 https://t.co/rqOnzDDMDI

6

22

132

To reach a future where robots could help people with tasks like cleaning and cooking, they must learn to make accurate choices in a fast-changing world. By building on our RT-1 and RT-2 models, these projects take us closer to more capable machines. ↓

3

5

63

🔵 When we applied SARA-RT to our state-of-the-art RT-2 model, the best versions were 10.6% more accurate & 14% faster after being given a short history of images. We believe this is the first scalable attention mechanism to offer computational improvements with no quality loss.

3

5

44

1️⃣ Our new system SARA-RT converts Robotics Transformer models into more efficient versions using a novel method: “up-training.” This can reduce the computational requirements needed for on-robot deployment, increasing speed while preserving quality. https://t.co/rqOnzDDMDI

2

9

63

To produce truly capable robots, two fundamental challenges must be addressed: 🔘 Improving their ability to generalize their behavior to novel situations 🔘 Boosting their decision-making speed We deliver critical improvements in both areas. ↓

2

9

59

We are shaping the future of advanced Robotics. Introducing AutoRT, SARA-RT and RT-Trajectories to address the notoriously difficult problems of generalization and speed of Transformer-based models in Robotics. https://t.co/uzwQGxhCSe

How could robotics soon help us in our daily lives? 🤖 Today, we’re announcing a suite of research advances that enable robots to make decisions faster as well as better understand and navigate their environments. Here's a snapshot of the work. 🧵 https://t.co/rqOnzDDMDI

0

0

6

The concept of the associative memory is an elegant idea, strongly motivated by how we humans memorize and with a beautiful mathematics behind it. Now there is a NeurIPS workshop on it :)

amhn.vizhub.ai

Associative Memory & Hopfield Network Worshop @NeurIPS 2023. Discuss the latest multidisciplinary developments in Associative Memory and Hopfield Networks. Imagine new tools built around memory as...

0

1

14

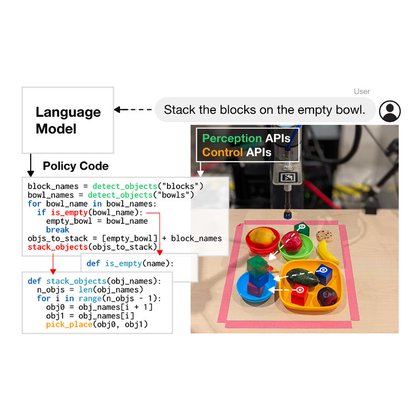

PaLM-E or GPT-4 can speak in many languages and understand images. What if they could speak robot actions? Introducing RT-2: https://t.co/MhgZqCRfOC our new model that uses a VLM (up to 55B params) backbone and fine-tunes it to directly output robot actions!

16

115

574

Multi-modal Transformers in action on robots give robots unprecedented capabilities to reason about the world. Check out RT-2, our new robotic model that makes it happen !

Excited to present RT-2, a large unified Vision-Language-Action model! By converting robot actions to strings, we can directly train large visual-language models to output actions while retaining their web-scale knowledge and generalization capabilities! https://t.co/27sBueV42q

1

0

11

Excited to present RT-2, a large unified Vision-Language-Action model! By converting robot actions to strings, we can directly train large visual-language models to output actions while retaining their web-scale knowledge and generalization capabilities! https://t.co/27sBueV42q

Today, we announced 𝗥𝗧-𝟮: a first of its kind vision-language-action model to control robots. 🤖 It learns from both web and robotics data and translates this knowledge into generalised instructions. Find out more: https://t.co/UWAzrhTOJG

0

14

76

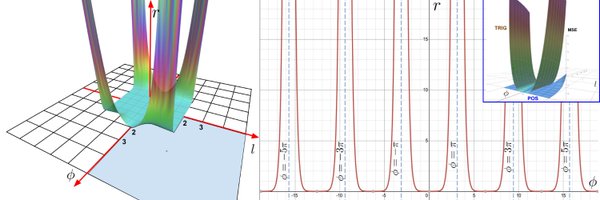

Presenting Performer-MPC, an end-to-end learnable robotic system that combines several mechanisms to enable real-world, robust, and adaptive robot navigation with real-time, on-robot transformers. Read more about this system → https://t.co/UfIiV8HBgP

13

39

182

We firmly believed in 2020 that algorithmic advances in the attention-mechanism computation constitute a gateway to a broad adoption of Transformers in Robotics and show it now: 8.3M-parameter model running with < 10ms latency in real.

0

0

2

Performers, introduced by us in 2020, deployed on robots for efficient navigation. https://t.co/hlkbRCUe35

research.google

Posted by Krzysztof Choromanski, Staff Research Scientist, Robotics at Google, and Xuesu Xiao, Visiting Researcher, George Mason University Despite...

1

0

5

An excellent summary of some of the achievements of our Team in 2022: https://t.co/kfjv3Mnqup. Performers were recently applied to make on-robot inference of Transformer-based models feasible. Is <10ms on-robot latency for 8.3M-parameter Transformer model possible ? Yes, it is.

research.google

Posted by Kendra Byrne, Senior Product Manager, and Jie Tan, Staff Research Scientist, Robotics at Google (This is Part 6 in our series of posts co...

1

0

7

How about foundation models having a nice conversation ?

With multiple foundation models “talking to each other”, we can combine commonsense across domains, to do multimodal tasks like zero-shot video Q&A or image captioning, no finetuning needed. Socratic Models: website + code: https://t.co/Zz0kbV5GTQ paper: https://t.co/NpsW61Ka3s

1

2

8