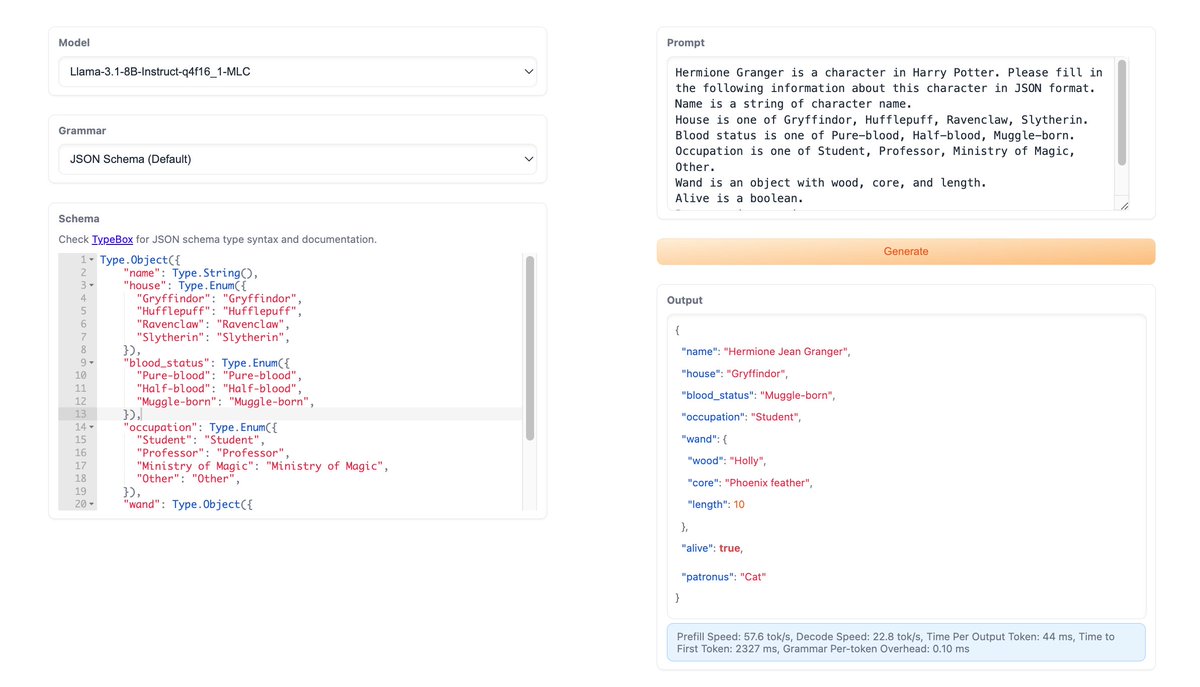

wip: allowing local models via WebLLM for OpenBB Copilot 🤯🤯🤯. all data stays in your control without leaving the browser. small models are becoming powerful. the WebLLM project from @charlie_ruan is just mind-blowing; it took me a few minutes to integrate, and it even supports streaming.