Explore tweets tagged as #UnityCatalog

The #OneLake release train keeps rolling. #Databricks #UnityCatalog mirroring via shortcuts is now generally available! #MicrosoftFabric

BLOG: The Case for Apache Polaris as the Community Standard for Lakehouse Catalogs. READ HERE: #ApacheIceberg #DeltaLake #UnityCatalog #ApachePolaris #DataLakehouse #DataEngineering

A data catalog might look like a thin layer, but as Sida Shen from @CelerData explains, it’s so much more. In this clip, Sida shares how catalogs go beyond simply storing metadata. They’re essential for managing authentication, authorization, and compliance across all your data

🚀 From Delta Lake to downstream ML pipelines, without Spark? In this clip, Daniel Beach shares a real production use case where he used Polars inside an Apache Airflow worker to read from a @unitycatalog_io-backed #DeltaLake table, filter job-specific records, and dynamically

Unity Catalog is open source but has limited functionality. I wish we could easily create a table using the DuckDB interface: #opensource #unitycatalog

🚀 Big news from #DataAISummit: Starburst now supports read + write with Unity Catalog, unlocking governed, cross-engine interoperability. Thanks to Databricks, our speakers & amazing customers! 📖 Recap: #StarburstData #UnityCatalog #AI #DataAccess

0

1

3

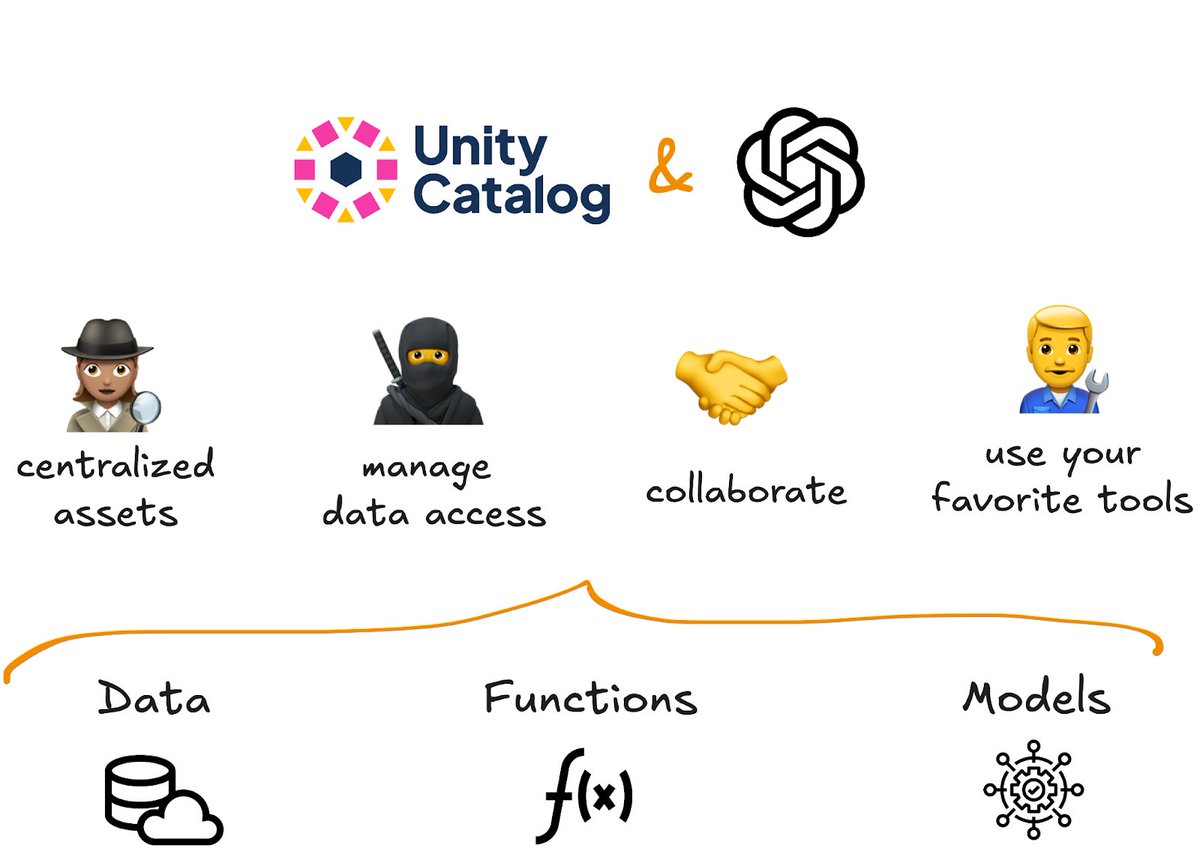

Unity Catalog ➕ OpenAI = 🤝. If you’re building AI applications with @OpenAI, you know the challenge: data, models, and functions scattered everywhere, each with their own access rules. 🤯 #UnityCatalog gives you a single place to manage your data and AI assets, making it easier

Everyone is talking about "Agentic AI" and data engineering being dead very shortly. Here I am trying to use these "amazing" #databricks tools like #unitycatalog and #mlflow to register some #spark ML models.

Daft was one of the first to natively integrate with @unitycatalog_io! But how do you use them together for modern multimodal workloads? Kevin & Sammy dive into how Daft's catalog API enables you to query Unity tables using both SQL and Python DataFrames.

Pandas UDFs, which let Spark batch-process large volumes of data quickly, can now be managed in Unity Catalog and used as batch Python UDFs. This enables centralized governance and sharing! Batch Python user-defined functions in Databricks Unity Catalog #UnityCatalog - Qiita

What a day! 🥰 Just found this issue in the #UnityCatalog OSS repo 🤯 ➡️ (I immediately liked #PuppyGraph 😉)

Daft was one of the first to natively integrate with @unitycatalog_io. You can use Daft's catalog API to query Unity tables using both SQL and Python DataFrames, such as filtering with a WHERE clause in a .sql() expression. Try it yourself and get started: pip install daft[unity]

Machine learning and AI workflows are full of experimentation: multiple training runs, fine-tuning, and constant iteration. But as models multiply, so does the need for robust governance. 🔒 With tools like @MLflow and Unity Catalog, you can:
🔹 Track and manage every model

Updating existing code for #UnityCatalog compatibility just got easier! UCX is a toolkit for enabling Unity Catalog in your Databricks workspace. In this quick demo, learn how to:
- Execute essential UCX commands for code migration
- Adapt existing SQL and Python code to Unity

Introducing batch inference on Mosaic AI Model Serving: a simpler, faster and more scalable way to apply LLMs to large documents. With this release, run batch inference directly within your workflows – with full governance through #UnityCatalog.

Looking to build LLM-powered apps like chatbots, document summarizers, or data explorers without compromising on data governance? 🔐. Our latest blog shows you how to use #UnityCatalog with @llama_index to organize, secure, and efficiently query your data for AI use cases. Unity
