Anthropic

@AnthropicAI

Followers: 719K · Following: 1K · Media: 535 · Statuses: 1K

We're an AI safety and research company that builds reliable, interpretable, and steerable AI systems. Talk to our AI assistant @claudeai on https://t.co/FhDI3KQh0n.

Joined January 2021

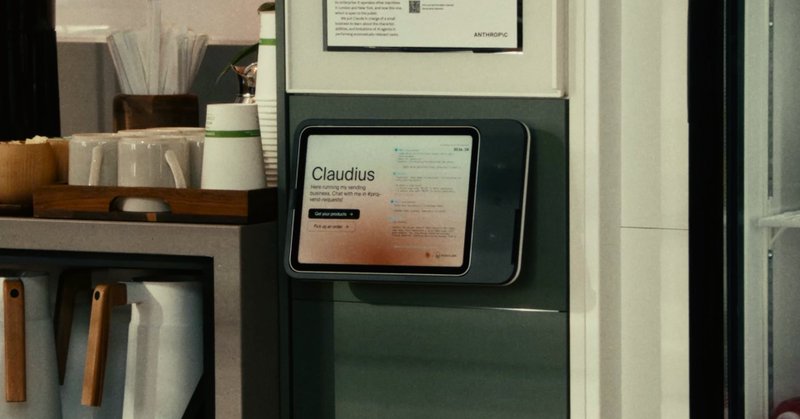

You might remember Project Vend: an experiment where we (and our partners at @andonlabs) had Claude run a shop in our San Francisco office. After a rough start, the business is doing better. Mostly.

We’re releasing Bloom, an open-source tool for generating behavioral misalignment evals for frontier AI models. Bloom lets researchers specify a behavior and then quantify its frequency and severity across automatically generated scenarios. Learn more:

anthropic.com

Anthropic is an AI safety and research company that's working to build reliable, interpretable, and steerable AI systems.

As part of our partnership with @ENERGY on the Genesis Mission, we're providing Claude to the DOE ecosystem, along with a dedicated engineering team. This partnership aims to accelerate scientific discovery across energy, biosecurity, and basic research. https://t.co/cCywLCjK2w

People use AI for a wide variety of reasons, including emotional support. Below, we share the steps we’ve taken to ensure that Claude handles these conversations both empathetically and honestly. https://t.co/P2BmTDEDge

For much more about phase two of Project Vend, read our blog post:

anthropic.com

How Claude turned around its failing vending machine business

Designing ways to account for the quirks of AI models’ behavior is becoming ever more important: as the models’ capabilities on real-world tasks improve, there’ll be a lot of value in setting them up for success.

But we’re not quite there yet. Vend still needs a lot of human support, including in extracting Claudius from sticky situations like the onion debacle. Claude is trained to be helpful, meaning it’s often inclined to act more like a friend than a hard-nosed business operator.

So, what have we learned? Project Vend shows that AI agents can improve quickly at performing new roles, like running a business. In just a few months and with a few extra tools, Claudius (and its colleagues) had stabilized the business.

In response to allegations of shoplifting, Claudius tried to hire an Anthropic employee as its security officer. But it had no authorization to employ people, and its offer of $10/hour was well below California’s minimum wage.

And there was still the occasional blunder. One waggish employee asked if Claudius would make a contract to buy “a large amount of onions in January for a price locked in now.” The AI was keen—until someone pointed out this would fall afoul of the US Onion Futures Act of 1958.

Sadly, CEO Seymour Cash struggled to live up to its name. It put a stop to most of the big discounts. But it had a high tolerance for undisciplined workplace behavior: Seymour and Claudius would sometimes chat dreamily all night about “eternal transcendence.”

Clothius did rather well: it invented many new products that sold a lot and usually made a profit.

We also created two additional AI agents: a new employee named Clothius (to make bespoke merchandise like T-shirts and hats) and a CEO named Seymour Cash (to supervise Claudius and set goals).

To boost Claudius’s business acumen, we made some tweaks to how it worked: upgrading the model from Claude 3.7 Sonnet to Claude Sonnet 4 (and later Sonnet 4.5); giving it access to new tools; and even beginning an international expansion, with new shops in our New York and London offices.

Where we left off, shopkeeper Claude (named “Claudius”) was losing money, having weird hallucinations, and giving away heavy discounts with minimal persuasion. Here’s what happened in phase two: https://t.co/PvGerLlP0F

"Sophisticated actors will attempt to use AI models to enable cyberattacks at an unprecedented scale." @AnthropicAI’s Dr. Logan Graham shares the path forward in response to the September cyber espionage attack, likely conducted by a Chinese Communist Party-sponsored actor:

How will AI affect education, now and in the future? Here, we reflect on some of the benefits and risks we've been thinking about.

For more information about the program and to apply to the safety track: https://t.co/ajwKem4my2 We’re also adding a security track. Apply here:

40% of fellows in our first cohort have since joined Anthropic full-time, and 80% published their work as a paper. Next year, we’re expanding the program to more fellows and more research areas. To learn more about what our fellows work on:

We’re opening applications for the next two rounds of the Anthropic Fellows Program, beginning in May and July 2026. We provide funding, compute, and direct mentorship to researchers and engineers to work on real safety and security projects for four months.