Jude Wells

@_judewells

Followers: 965 · Following: 2K · Media: 72 · Statuses: 277

PhD student at University College London: machine learning methods for structure-based drug discovery.

London

Joined March 2009

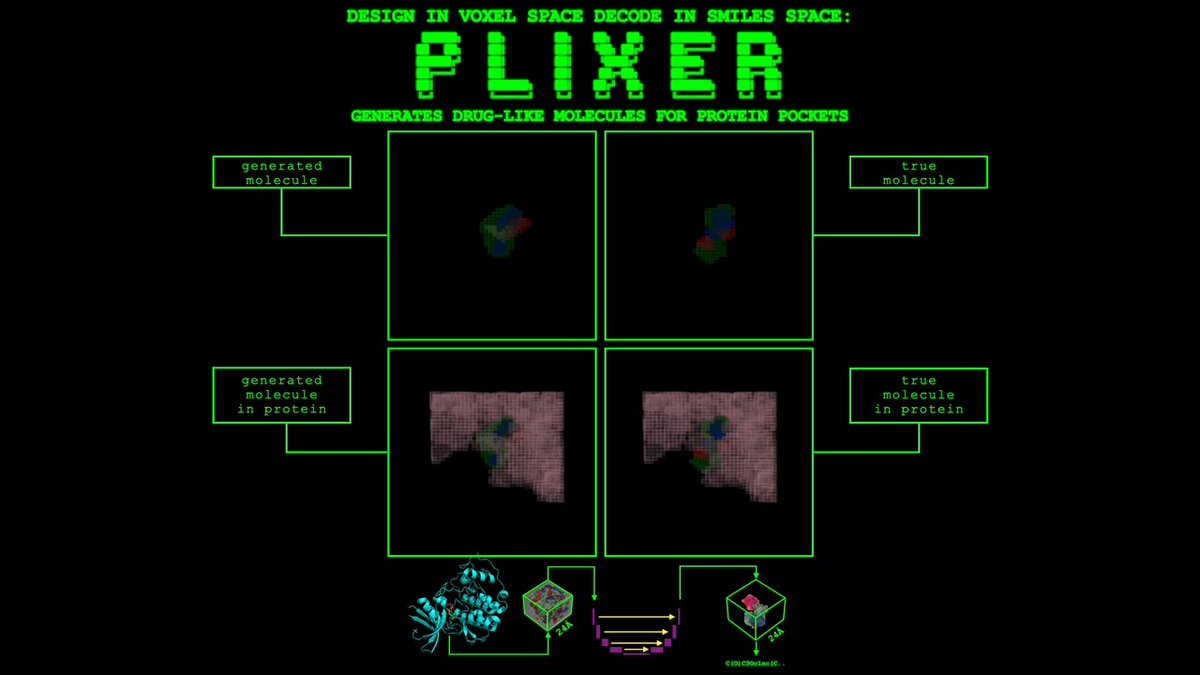

I'll be at the @genbio_workshop at #ICML2025 today, presenting my poster on Plixer, a generative model for drug-like molecules - given a target protein pocket, Plixer generates a voxel (3D pixel) hypothesis for the binding ligand before decoding it into a valid SMILES string 1/n

1

1

7

RT @dmmiller597: Come and join me, @_judewells, @_barneyhill, @jakublala and others in London on July 31st to hear some great flash talks o…

0

4

0

Nice.

We're backing the 'OpenBind' project, a new AI initiative announced at #LondonTechWeek that will build the world's biggest protein dataset right here in the UK. That means faster drug discoveries, new treatments for diseases like cancer, and cutting the cost of getting

0

0

2