Haim Sompolinsky

@HSompolinsky

Followers: 5K

Following: 5

Media: 11

Statuses: 57

@Harvard Professor of MCB & Physics and Director of Swartz Program in Theoretical Neuroscience; @HebrewU Professor of Physics and Neuroscience (Emeritus)

Joined July 2018

RT @Isaac_Herzog: With great Israeli pride, I congratulated Professor Haim Sompolinsky of the Hebrew University of Jerusalem today on winning the prestigious Lundbeck prize…

0

22

0

RT @NatComputSci: We highlight a study by Ben Sorscher, @SuryaGanguli and @HSompolinsky in which they explore a quantitative theory of neur….

0

4

0

RT @SuryaGanguli: Our new paper @NeuroCellPress "A unified theory for the computational and mechanistic origins of grid cells" led by Ben…

0

47

0

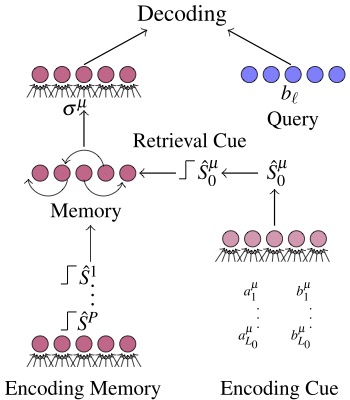

RT @SuryaGanguli: Our new paper in @PNASNews: "Neural representation geometry underlies few shot concept learning" led by…

0

66

0

Postdoc position in Sompolinsky Group: If you are interested in doing exciting postdoc research at Harvard at the forefront of computational neuroscience and the interface between natural and artificial intelligence, send application and 3 letters to hsompolinsky@mcb.harvard.edu.

2

62

129