Tomoyasu Horikawa

@HKT52

Followers

393

Following

1K

Media

12

Statuses

2K

Researcher

JAPAN

Joined January 2010

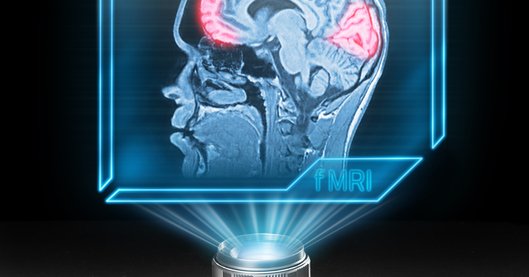

Our "mind captioning" paper is now published in @ScienceAdvances. The method generates descriptive text of what we perceive and recall from brain activity — a linguistic interpretation of nonverbal mental content rather than language decoding. https://t.co/Lt48p2pUYS

science.org

Nonverbal thoughts can be translated into verbal descriptions by aligning semantic representations between text and the brain.

Our new paper is on bioRxiv. We present a novel generative decoding method, called Mind Captioning, and demonstrate the generation of descriptive text of viewed and imagined content from human brain activity. The video shows text generated for viewed content during optimization.

3

23

77

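[Amazing] NTT unveils technology that uses AI to turn "what you picture in your mind" into text https://t.co/L4NyEtx3sB Brain states while watching a video, or while recalling a previously watched video, are measured with fMRI, which can identify active brain regions. The system reportedly outputs text describing what video was viewed or recalled.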

317

4K

18K

Our recent work on “mind captioning” was covered by CNN. Scientist turns people’s mental images into text using “mind-captioning” technology https://t.co/cGTmKW6gnR

cnn.com

A scientist in Japan has developed a technique that uses brain scans and artificial intelligence to turn a person’s mental images into descriptive sentences.

1

1

7

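[New research] Mind captioning: Deciphering thoughts that cannot be put into words | Masahito Yamagata - Will synthetic biology be the key to a new industrial revolution? | NewsPicks https://t.co/3ek435j3BU Why is this technology attracting attention? Behind it is the reality that generative AI such as ChatGPT and Gemini is rapidly permeating society.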

newspicks.com

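"What if we could read, as text, what a person is thinking in their head?" This question, long a theme of science fiction novels and films, is now beginning to take shape as real research. In a study by Dr. Tomoyasu Horikawa of NTT Communication Science Laboratories, "mind captioning," formally published in Science Advances (open access) on November 5 (and available as a preprint since last year), from brain activity...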

0

3

3

Brain Decoder Translates Visual Thoughts Into Text: A new generative method called mind captioning can translate visual and recalled thoughts into coherent sentences by decoding semantic patterns across the brain. Instead of relying on traditional language regions, the system

11

102

292

A new technique called ‘mind captioning’ generates descriptive sentences of what a person is seeing or picturing in their mind using a read-out of their brain activity, with impressive accuracy. https://t.co/RcPIYm2t0F

nature.com

Nature - A non-invasive imaging technique can translate scenes in your head into sentences. It could help to reveal how the brain interprets the world.

40

344

1K

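Our paper on "Mind Captioning," a technique that generates text describing the visual content a person is seeing or imagining from brain activity, has been published in @ScienceAdvances. https://t.co/UVDHdE44iP Nature News kindly covered it as well, so please take a look if you are interested. https://t.co/8J3yq0s5Rk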

science.org

Nonverbal thoughts can be translated into verbal descriptions by aligning semantic representations between text and the brain.

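A preprint of our decoding study, which generates text describing the content of videos a person is perceiving or imagining from brain activity, is now available. The study generates text that captures the semantic information represented in the brain by iteratively optimizing text based on semantic features decoded from fMRI-measured brain activity.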

1

12

26

For more details, check the project page: https://t.co/jka6hGokXl Here, you’ll find access to data, code, and a Q&A section, along with more about our "mind captioning" work.

0

0

3

I also appreciate @maxdkozlov for the interview and @alex_ander for sharing his thoughts in the Nature News article on our work: "'Mind-captioning' AI decodes brain activity to turn thoughts into text." https://t.co/8J3yq0s5Rk

1

0

3

I'm deeply grateful to the reviewers for their thoughtful feedback — and to Niko @KriegeskorteLab for his open commentary, which led to further validation and discussion of our methods and clarified the semantic features' role in decoding mental content. https://t.co/YSnqSTKKy7

1

0

2

Mind captioning: Evolving descriptive text of mental content from human brain activity https://t.co/b1ggQ5YZZp

#biorxiv_neursci

0

9

16

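A preprint of our decoding study, which generates text describing the content of videos a person is perceiving or imagining from brain activity, is now available. The study generates text that captures the semantic information represented in the brain by iteratively optimizing text based on semantic features decoded from fMRI-measured brain activity.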

0

0

10

We used video stimuli collected by Cowen & Keltner (2017). All experimental data and code used in this study will be made publicly available via OpenNeuro and GitHub.

0

0

1

The method can be applied to mental imagery, allowing us to interpret mental content by translating brain signals into linguistic descriptions. This may lead to non-verbal thought-based brain-to-text communication, potentially aiding people with language expression difficulties.

1

0

3

While the semantic features were useful for predicting video-induced brain activity in the language network, intelligible descriptions were still generated even when this network was ablated, highlighting explicit representations of structured semantics outside this network.

1

0

2

We combined semantic feature decoding with a novel text optimization method assisted by a masked language modeling (MLM) model to iteratively optimize text. The generated text captured viewed content, achieving ~50% accuracy in identifying videos out of 100 (chance=1%).

1

1

3
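The "~50% accuracy in identifying videos out of 100 (chance=1%)" figure above refers to an identification test. A minimal sketch of how such a test could be scored follows. It assumes each decoded feature vector is matched to the candidate it correlates with most strongly; the correlation-based matching, the function names, and the synthetic data are illustrative assumptions, not the paper's actual pipeline.

```python
import math
import random


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy) if sx and sy else 0.0


def identification_accuracy(decoded, candidates):
    """Fraction of trials where the best-correlated candidate is the true one.

    Assumes decoded[i] was produced for candidates[i].
    """
    hits = 0
    for i, d in enumerate(decoded):
        scores = [pearson(d, c) for c in candidates]
        best = max(range(len(candidates)), key=scores.__getitem__)
        hits += best == i
    return hits / len(decoded)


# Synthetic stand-ins (hypothetical): 100 candidate videos, 32-dim features.
rng = random.Random(0)
n_videos, n_dims = 100, 32
candidates = [[rng.gauss(0, 1) for _ in range(n_dims)] for _ in range(n_videos)]
# "Decoded" features are simulated as noisy copies of the true features.
decoded = [[v + rng.gauss(0, 1.5) for v in c] for c in candidates]

chance = 1 / n_videos  # 1% when choosing among 100 candidates
acc = identification_accuracy(decoded, candidates)
print(f"accuracy={acc:.2f}, chance={chance:.2f}")
```

With 100 candidates, random guessing gives 1 in 100, which is why roughly 50% correct is far above chance.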

Our new paper is on bioRxiv. We present a novel generative decoding method, called Mind Captioning, and demonstrate the generation of descriptive text of viewed and imagined content from human brain activity. The video shows text generated for viewed content during optimization.

3

18

51