ChenHuajun@Zhejiang_University

@ChenHuajun

Followers: 240

Following: 48

Media: 31

Statuses: 78

Full professor at the College of Computer Science, Zhejiang University (@ZJU_China); lead founder of https://t.co/p9Kucgjkj6; interested in #AGI, #KG, #LLM, #AI4Science.

Hangzhou

Joined November 2021

TextGrad: Symbolic Gradient vs Neural Gradient — A symbolic feedback optimization mechanism and an improved approach for neural-symbolic system integration. It offers potential for optimizing multi-agent workflows involving various black-box modules. Combining it with agent

1

0

3

OntoTune: Our #WWW2025 paper leverages hierarchical knowledge to guide LLM tuning, enabling the generation of responses guided by the ontology such as SNOMED CT. OntoTune: Ontology-Driven Self-training for Aligning Large Language Models. https://t.co/ey3Oy1ScdM

1

0

4

Human or LLM as Judge? Our #ICLR2025 paper, "SaMer: A Scenario-aware Multi-dimensional Evaluator for Large Language Models," introduces a fine-grained, scenario-adaptive evaluator that dynamically adjusts evaluation dimensions based on query context. As large models evolve,

1

1

6

Nikola Tesla once said, "It's the quality of thought, not quantity, that counts." ✍️

58

217

1K

Benchmarking Agentic Planning: Our #ICLR2025 paper "Benchmarking Agentic Workflow Generation" shows that LLMs struggle more with graph planning than sequential planning. We introduce WORFBENCH, an agentic workflow benchmark, and WORFEVAL, a corresponding evaluation protocol, to

1

2

9

MoE for KG: Our #ICLR2025 paper proposes Mixture of Modality Knowledge Experts (MoMoKE) to address the representation learning problem in multi-modal knowledge graphs. https://t.co/Sd3eytn7SJ

1

0

11

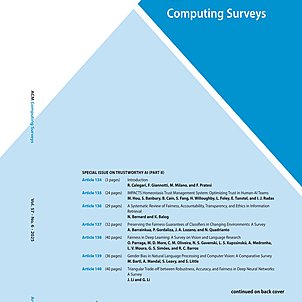

Our comprehensive survey on scientific large language models is now published in ACM Computing Surveys. Covering nearly 300 citations, it spans text, molecules, proteins, genomes, and single cells across biology, chemistry, materials, and medicine. https://t.co/P42MIyTPjI

dl.acm.org

Large Language Models (LLMs) have emerged as a transformative power in enhancing natural language comprehension, representing a significant stride toward artificial general intelligence. The applic...

1

0

6

Genes encode fundamental genetic information, forming the basis for RNA, proteins, and even complex biomolecular assemblies like CRISPR-Cas. With a gene foundation model such as Evo, a key question emerges: can genomic sequence signals alone enable the prediction and generative

A new Science study presents “Evo”—a machine learning model capable of decoding and designing DNA, RNA, and protein sequences, from molecular to genome scale, with unparalleled accuracy. Evo’s ability to predict, generate, and engineer entire genomic sequences could change the

0

0

1

DePLM: Denoising Protein Language Models for Property Optimization 1. The study introduces DePLM, a cutting-edge framework that refines evolutionary information (EI) in protein language models (PLMs) to enhance property optimization by filtering out irrelevant noise. 2. DePLM

0

10

48

"A good teacher does not teach facts, he or she teaches enthusiasm, open-mindedness and values." - Gian-Carlo Rota

51

598

3K

How can we improve LLMs' step-wise reasoning and planning ability? Our #EMNLP2024 paper proposes a framework that, echoing O1's multi-step reasoning, enhances LLMs by leveraging knowledge graphs (KGs) to synthesize step-by-step instructions. Just as chain-of-thought reasoning

0

1

4

The world has changed far more in the past 100 years than in any other century in history. The reason is not political or economic but technological — technologies that flowed directly from advances in basic science. - Stephen Hawking

34

99

392

Start from Zero: Triple Set Prediction for Automatic Knowledge Graph Completion (KGC): In our #TKDE paper, we redefine the KGC task by introducing "Triple Set Level" completion. Unlike traditional methods that predict missing elements at the single-triple level, our approach

0

0

4

Our #NeurIPS2024 paper, 'DePLM: Denoising Protein Language Models for Property Optimization,' leverages the denoising process from diffusion models to filter noisy information from protein language models. This approach enables the model to focus more effectively on predicting

0

0

7

Even Hinton himself doesn't quite understand how someone working on neural networks ended up winning the Nobel Prize in Physics. 😂

“How could I be sure it wasn’t a spoof call?” 2024 physics laureate Geoffrey Hinton received a phone call from Stockholm in the early hours in a hotel room in California. Multiple Swedish accents helped reassure him that his #NobelPrize in Physics, awarded today, was real.

0

0

1

Agent Planning with the World Knowledge Model (WKM). Our #NeurIPS2024 paper introduces WKM, an independent model that enhances large-model agents by synthesizing task and state knowledge from expert trajectories. Fine-tuned from a small base model, WKM applies a process

1

1

12